Following a recent post by A. Bouchez about an optimized CRC32 hash, I took it as an opportunity to re-run a small String Hashing Shootout on the worst hash function collision torture test I know: ZIP codes in UTF-16 (Delphi’s default String format).

Following a recent post by A. Bouchez about an optimized CRC32 hash, I took it as an opportunity to re-run a small String Hashing Shootout on the worst hash function collision torture test I know: ZIP codes in UTF-16 (Delphi’s default String format).

Contenders

- CRC32 using the SynCommons CPU implementation (based on Aleksandr Sharahov asm version)

- KR32 hash from SynCommons, based on Kernighan & Ritchie

- FNV1a hash from SynCommons, a canonical implementation of the Fownler-Noll-Vo hash

- SimpleStringHash from dwsUtils, a FNV1a derivative optimized for UTF-16

- BobJenkins hash from Generics.Defaults, the hash you will get with Delphi generics

Benchmark & Results

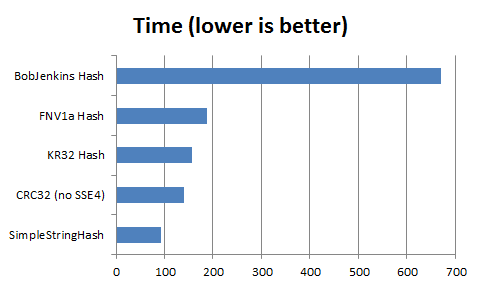

The benchmark consists in computing a 16 bit hash for all 5 digit numerical zipcodes, measuring the time taken and the number of collisions.

The time below is the lowest value from 30 runs of the benchmark.

Arnaud reports the SSE4 version to be twice faster if your CPU supports it (mine does not), so it’s same to assume SSE4 CRC32 would be the fastest hash of the benchmark. Though for short strings, brute force performance doesn’t matter too much, unless you’re relying on the Generics’s hash of course.

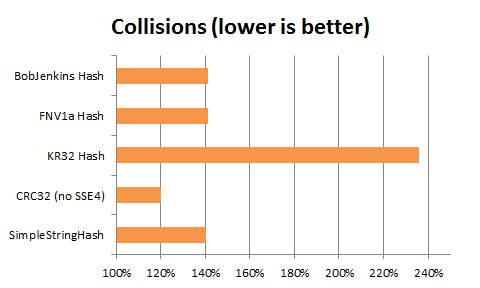

No for a look at the hash collision, a very important factor for use as the main hash for a hash table. Below are the results, with the collisions normalized as a percentage of a “perfect hash”, ie. a perfect hash would score 100%.

There is one obvious loser: the KR32 function. If you are using it, please just stop. It is both horrible in terms of collisions and not particularly fast.

There is one obvious winner with the CRC32, which pulls quite ahead of the pack, however it is doing a little too good.

The rest of the functions behave quite similarly in a pack, within a 1% spread (BobJenkins being last FWIW).

CRC32 is Too Good?

I tried a few cryptographic hash functions (MDS5, SHA1 & SHA256), they were all much (much) slower, but all got a 140% collisions score. This hints that 140% could be the practical optimum for a generalist hash on that dataset.

So there is definitely an oddity with the CRC32 hashing performance on ZIP codes. On that particular benchmark, it “works for us”, but there are probably cases in which it will not. Which cases those are, I don’t know.

I tried a some other datasets: random data, dictionary words, composed dictionary words… but the oddity did not re-appear.

On those other datasets, the benchmark results were similar: similar performance profile, similar collisions profiles with KR32 being the worst, BobJenkins trailing the pack of the “good” hashe, and cryptographic hashes taking the lead.

But for all of them CRC32 stayed in the middle of the pack in terms of collisions.

Conclusion

While CRC32 the ZIP codes benchmark pointed to an oddity. Other tests did not exhibit weakness, and CRC32 performed very well. But absence of proof of weakness is not proof of absence of weakness 🙂

Findings of good behavior were reproduced by others for other datasets.

CRC32 having been designed to be sensitive to 1 bit changes, and the ZIP codes dataset having relatively few changing bits (an UTF-16 zipcode is 80 bits long, but has less than 17 bits of data), it’s possible it is sort of a “best case” for CRC32 as a hash… or it is possible it is a “worst case” for CRC32 as a hash but we got lucky.

Note that there is an old myth about CRC32 not being suitable for hashing, originating in a 2008 paper (now offline), which was later found to be based on a bugged CRC32 test. So the oddity dicussed here is a different one.

Independent benchmarking and testing welcome!

Sorry, I can’t understand what do you mean by ‘cryptographic hash functions (MDS5, SHA1 & SHA256), they … got a 140% collisions score.” There are no known collisions for SHA1 & SHA256 today.

Hi.

The benchmark source code is available? Thanks!

@Serg it’s for a 16bit hash (64k buckets hashtable if you prefer), so only 16 bits of entropy are used out of the hash functions. The benchmarks is looking at collisions that could occur between elements taken from a dataset of 100k elements, so collisions are guaranteed.

@Alexandre source is trivial, and for better confirmations, starting from a blank slate would be preferable, but here goes http://pastebin.com/ETMH8tBc

Note that SimpleStringHash() is optimized for UTF-16 input, so will read the data by word, whereas all other hashing functions are working at byte level. So it is a bit “unfair”, even if of course, SimpleStringHash() is a perfect solution when working mostly on UTF-16 encoded text.

From our own tests, based on random UTF-8 content with an entropy similar to real human language tests, crc32 gives less collisions than the others, with a growing hash table size of powers of two, starting at 256 buckets.

Some numbers on our side, on Win32, hashing 10000 random strings, from 1 to 1250 bytes long.:

Hash32() is 2.5 GB/s (this adler32-family hash reads per dword, but has a lot of collisions)

fnv32() 715.5 MB/s

kr32() 0.9 GB/s,

crc32c() 1.7 GB/s (x86 asm)

crc32c() 3.7 GB/s (SSE4.2)

crc32() 330 MB/s (Delphi zlib)

Number under Win64 are lower, since we rely on “pascalish” version of the code, except for the crc32c() version on SSE4.2 CPU, which stays at 3.7 GB/s.

@A.Bouchez random strings are not a good way to stress a hash function, since on a random string, a hash function only has to preserve entropy and not distribute it (ie. no need for “avalanche” properties). Case in point, on truly random strings you could just take the first or last bytes and use them as “hash”, which would be even faster…

As I noted CRC32 worked just fine on a variety of other cases, but for ZIP codes, it had this oddity. Now, it’s a “good oddity”, but I’m wondering what is the mechanism behind it, and if/how it could become detrimental.

The hashing performance advantage of CRC32 could be quickly negated if you were to encounter a dataset which would trigger a “bad oddity”. In the same way as a naive QuickSort can suddenly become O(n²) on nearly sorted data and make an application/server seem stuck, a “bad oddity” could turn an hashtable into a linear scan table.

It would be interesting to test CRC16 then. If CRC32 was so good in producing 16-bit hashes on the given dataset the CRC16 could be even better.

@Serg would mostly be academic though, as CRC16 wouldn’t be able to handle hashes larger than 16bits…

Let me try… no it’s worse, meaning that the 16bits of CRC16 have less entropy than 16bits out of CRC32’s 32bits.

Non normalized collision counts:

– CRC32: 41408

– CRC16: 62144

– KR32: 81296

– others: 48xxx

Hard to explain it then.

For some reason CRC32 produces 16-bit hash distribution which is closer to uniform on the given dataset compared with other hash functions.

@Eric

As I wrote, the random strings used for our tests are not “perfect” random, but have “an entropy similar to real human language tests”. If, as you propose, I try to “just take the first or last bytes and use them as “hash”, then it results in a huge amount of collisions.

In practice, those strings compress with deflate with the same compression ratio than English or French text (I used a Bible as reference), or, when hashed with our functions, a similar collision count.

This is why I used those “not so random” strings in our tests, including regression tests.