This is a belated news update, with only the highlights:

Language News

- combined property/field declarations are now supported (same syntax as Oxygene)

- dynamic arrays now support a Remove method, which has the same syntax as IndexOf, and can be used to remove elements by value

- the “for var xxx in array” syntax is now supported; it combines a local, type-inferred variable declaration with a for … in loop.

- unit test coverage is now at 97% for the compiler, 91% for the whole of DWScript

- various obscure bugs found and fixed
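As a rough illustration of remove-by-value on a dynamic array, here is a minimal C sketch. It assumes that Remove, like IndexOf, takes a value and an optional start index, deletes only the first match, and returns its index (or -1); the announcement does not spell those details out, and `IntArray`, `int_array_index_of` and `int_array_remove` are hypothetical names, not DWScript internals.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical dynamic array of ints: a buffer plus its current length. */
typedef struct {
    int *items;
    size_t len;
} IntArray;

/* Linear search from `from`, mirroring an assumed IndexOf(value, fromIndex). */
static long int_array_index_of(const IntArray *a, int value, size_t from)
{
    for (size_t i = from; i < a->len; i++)
        if (a->items[i] == value)
            return (long)i;
    return -1;
}

/* Assumed Remove semantics: find the first match at or after `from`,
   shift the tail down by one slot, and return where it was found
   (-1 if the value is absent, in which case the array is unchanged). */
static long int_array_remove(IntArray *a, int value, size_t from)
{
    long i = int_array_index_of(a, value, from);
    if (i >= 0) {
        memmove(&a->items[i], &a->items[i + 1],
                (a->len - (size_t)i - 1) * sizeof a->items[0]);
        a->len--;
    }
    return i;
}
```

Each removal costs a linear search plus a shift of the remaining elements, so it is O(n) per call.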

Script engine News

- the script engine's transition from stack-based to closure-based has begun; besides internal changes, the visible impact is improved performance for objects, records, static arrays, and var and const params

- full transition to closure-based engine (and support for anonymous methods and lambdas in the script engine) is pushed back to 2.4

Teaser News

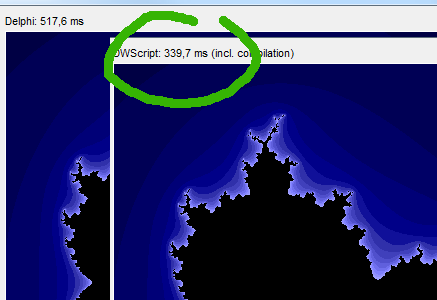

Also, by way of a teaser, here is a screenshot related to something brewing in the lab… It’s from a Delphi XE app running the Mandelbrot benchmark (timings are for several runs), and “DWScript” in that screenshot refers to the script engine.

Note that since this screenshot was taken, performance has improved, and the pony has learned new tricks 🙂
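For readers unfamiliar with the benchmark, the core of a Mandelbrot computation is an escape-time loop over double-precision values, exactly the kind of floating-point code the JITter targets first. The sketch below is a generic version in C; the actual benchmark script and its pixel output are not shown in the post, so `mandel_iter` and its parameters are illustrative assumptions.

```c
/* Escape-time iteration for one point c = cr + i*ci:
   iterate z = z^2 + c from z = 0 and count the steps until |z| > 2
   (tested as |z|^2 > 4 to avoid a square root), capped at max_iter. */
static int mandel_iter(double cr, double ci, int max_iter)
{
    double zr = 0.0, zi = 0.0;
    int n = 0;
    while (n < max_iter && zr * zr + zi * zi <= 4.0) {
        double t = zr * zr - zi * zi + cr; /* real part of z^2 + c */
        zi = 2.0 * zr * zi + ci;           /* imaginary part of z^2 + c */
        zr = t;
        n++;
    }
    return n;
}
```

Points inside the set run until `max_iter`; points outside escape after a few iterations (for example c = 1 escapes after 3).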

Eric, when will it be possible to ride this pony?

Sounds impressive! Hopefully it’s no April 1st joke 😉

I currently use dcc32.exe (for performance and other reasons) as an internal “scripting” engine in a product I use personally, and am thinking of replacing it so that I can distribute the software. So count me as a “follower” of DWScript.

Do you have a link that describes the difference between a stack-based and closure-based script engine? I am familiar with stack-based but not with closure-based.

A big thank you for your work! 😉

@Herbert Sauro The Wikipedia article has some bits. Basically, it means that when making a function call, instead of allocating space on the stack for the local variables, the engine allocates space on the heap; you can think of it as an implicit class per function whose fields hold the local variables.
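A minimal C sketch of the idea, assuming a hypothetical script function whose only local is `count`: the `CounterFrame` struct plays the role of the implicit class, and because it lives on the heap, a closure can keep using it after the call that created it has returned. This is not DWScript's actual implementation; all names are made up for illustration, and the caller frees the frame here where a real engine would need some ownership scheme (reference counting, GC).

```c
#include <stdlib.h>

/* Hypothetical "implicit class" for one script function:
   its local variables become fields of a heap-allocated frame. */
typedef struct CounterFrame {
    int count;
} CounterFrame;

/* A closure pairs compiled code with the frame it captured. */
typedef struct Closure {
    int (*code)(CounterFrame *frame);
    CounterFrame *frame;
} Closure;

/* Body of the captured lambda: reads and updates the captured local. */
static int counter_body(CounterFrame *frame)
{
    return ++frame->count;
}

/* "Calling" the outer function allocates its frame on the heap, so the
   frame outlives the call; on a stack it would be reclaimed on return. */
static Closure make_counter(void)
{
    Closure c;
    c.code = counter_body;
    c.frame = malloc(sizeof *c.frame);
    if (c.frame != NULL)
        c.frame->count = 0;
    return c;
}

static int closure_call(Closure c)
{
    return c.code(c.frame);
}
```

With a stack-based layout, `count` would sit in a slot reclaimed when the outer call returns, so a lambda referring to it would dangle; heap frames avoid that, which is why closures generally need them.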

@Sergionn It’s in SVN right now. The only case that is (almost fully) JITted is the Mandelbrot benchmark; the rest of the test suite passes, but with much more marginal JITting, and more tests will be needed anyway, as the JITter will stress things in new ways. Current JITting is limited to simple local variables, floating point, and some special cases of loops.

The JITter code, as available in SVN, is great.

Easy to follow and understand.

Pretty close to the JavaScript code emitter, as far as I remember. 😉

I know this is a first attempt, targeting the mandelbrot benchmark, but I’ve some questions.

Do you plan to make a more “aggressive” use of integer registers (only xmm registers are used by now)?

Do you plan to target x64 also?

Why not directly set the memory blocks as PAGE_EXECUTE_READWRITE?

What about your initial plan of using LLVM as a back-end (I think it would make your development much faster/safer/open)?

I suppose the ability to emit a text file with mixed source code and asm output could be very handy, also for testing/regression purposes. What is nice is that some higher-level optimizations (like constant folding) directly benefit your JIT.

But as a proof of concept, your new units clearly show that your JIT attempt is a success, and worth working on!

@A. Bouchez

Some of the JIT code would not even compile under x64. I saw at least one case where a method used inline assembly as part of its body, which is a no-no in 64-bit land, AFAIK. Unless the rules have changed, a method has to be all Pascal or all ASM; the compiler won’t let you mix the two the same way you can in 32-bit Delphi.

@A. Bouchez The architecture is only similar in that you have one class per expression; otherwise it’s fairly different. Christian Budde is investigating LLVM, but that would be for a full compilation, rather than JIT. I’ve decided not to pursue the LLVM option for JIT at the moment; the several projects out there that use LLVM for JIT are faced with annoying issues (LLVM is huge, and compilation is very slow for a JIT).

The memory blocks are set in two steps (write no execute, then execute no write) both as a security measure and a way to catch JITter bugs.
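The two-step protection can be sketched as follows. The JITter runs under Delphi on Windows, where the equivalent calls would be VirtualAlloc with PAGE_READWRITE followed by VirtualProtect with PAGE_EXECUTE_READ; this sketch assumes a POSIX system instead and uses mmap/mprotect. `emit_code` is a hypothetical helper, and the six bytes encode `mov eax, 42; ret` for x86.

```c
#define _DEFAULT_SOURCE /* for MAP_ANONYMOUS on glibc */
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>

/* x86 machine code for: mov eax, 42 ; ret */
static const unsigned char ret42[] = {0xB8, 0x2A, 0x00, 0x00, 0x00, 0xC3};

/* Copy machine code into a fresh mapping in two protection steps:
   1) read + write, no execute, while the bytes are emitted;
   2) read + execute, no write, before the code may run.
   Returns the executable block, or NULL on failure. */
static void *emit_code(const unsigned char *code, size_t len)
{
    void *mem = mmap(NULL, len, PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED)
        return NULL;
    memcpy(mem, code, len);
    if (mprotect(mem, len, PROT_READ | PROT_EXEC) != 0) {
        munmap(mem, len);
        return NULL;
    }
    return mem;
}
```

Once the second call succeeds, a stray write into the block faults instead of silently corrupting the generated code, which is how the scheme doubles as a bug detector.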

I don’t plan on a general-purpose register allocator (i.e. outside of xmm and some special cases) at the moment for the 32bit JITter, but the 64bit JITter will have one. I started on the 32bit JITter essentially because that’s the one where there was a need to complement Delphi (on floats), and because the Delphi 32bit debugger is more stable, but ultimately, the 64bit JITter should be the most extensive one (that’s the plan anyway).

@Mason Wheeler Those have now been segregated; the asm sections are there to grab the VMT offsets, which are not (easily) accessible from regular code.

@Mason Yes, I also saw that the DWS code does not target

@Eric LLVM has two modes, i.e. compilation and JIT, and I understand that you do not want to use LLVM’s JIT for good reasons.

Did you take a look at the BESEN JITs? https://code.google.com/p/besen Its code generator is not as clean as DWS’s (there is no class per operation, just huge methods with a “case..of” switch), but it supports both x86 and x64 opcodes.

When I converted mORMot to x64, I was surprised by the Delphi XE3 64 bit compiler and debugger. Some SSE2 opcodes were missing at the asm block level, function calls are not documented beyond the calling convention (e.g. I had to discover that a function returning a reference-counted result has to put it in the 2nd position!), and the official RTL code in System.RTTI.pas looked broken to me (so it was not a good reference)… but the IDE was pretty stable. I found some issues in the SQLite3 library itself, but none in the Delphi-generated code. You can take a look at mORMot.pas for some ideas about x64 calling convention use in Delphi.

But perhaps FreePascal/Lazarus may be a better solution, when targeting x64.

In all cases, I suspect the DWS JIT will be slower than the V8 or SpiderMonkey engines (not the same project size), but also with much less overhead and memory use. Our tests of BESEN showed that it was much slower than SpiderMonkey. DWS’s JIT may be at the same performance level. “In medio stat virtus”, as Thomas Aquinas said: DWS will target good execution speed and a fast JIT, and this is where object pascal is a major plus in comparison to other scripting languages (like Python or Ruby). And it has some advantages with respect to Go, for instance (like its true class model).

About performance, what about the possibility of using binary arrays at the language level (as we can do in modern JavaScript)? Is an “array of integer” in DWS still an array of variants rather than a memory buffer? How would DWS compete with Delphi/JavaScript when implementing compression or encryption algorithms on memory blocks? And also, what about mapping strings to memory buffers? I’m not talking about pointers here, just about some classes dedicated to fast memory access, both read and write, which a JIT could compile into a single fast asm opcode.

@A. Bouchez

Yes, I actually started with something similar to BESEN (not the massive case of, but the copying of inline asm sections), then quickly switched to an “intrinsics light” approach. Those inline asm sections also mean BESEN won’t compile in Delphi 64, AFAICT.

I’ve given FreePascal a try, but CPU-level debugging in FreePascal is very primitive, so I’ll get back to it once everything is rock-solid in Delphi.

And yes, V8 & SpiderMonkey have way more backing, but on the other hand, they start from untyped script, while DWS is strongly typed, which reduces the complexity.

DWS is still using Variants, they’re on the way out, but the road is still long since I don’t have time for a major shake up, so it’s step by step.

For fast low-level buffers, it should already be possible: the magic functions can have a dedicated inline JIT (cf. sqrt, min & max), so you could currently design a set of magic functions to make that possible. Those could later be “wrapped up” as type helpers if you want an object syntax. That could also allow supporting SIMD intrinsics in the script.

@Eric

Intrinsics is a great feature… very low level, but may make the difference, when performance is mandatory!

But the best approach should be some dedicated types, I suppose.

From the performance point of view, Variants are somewhat of a problem, but if JIT is possible, they should no longer be one, thanks to DWS’s strong typing.

Very good work!

@A. Bouchez Even in the regular script engine, variants are often used as memory containers, rather than variants (using pointers to access the strongly typed data). However variants are used to handle memory management of managed types (strings & interfaces/classes), so before they can be eliminated, that memory management has to become explicit in the engine.

Eric, did you try the Delphi’s Mandelbrot in x64 mode?

With {$EXCESSPRECISION OFF} – see http://www.zhihua-lai.com/acm/delphi-xe3-excessprecision-off/

Just to compare with the new SSE2 generated opcodes on Win64 by newer Delphi versions.

@A. Bouchez You mean that switch? 😉 http://delphitools.info/2011/09/09/happy-excessprecision-off/

Though in that case it doesn’t matter, as the computations are double-precision (single precision isn’t enough for the Mandelbrot).

And yes, I did try them: the JITter stands about halfway between Delphi 32 FPU code and Delphi 64 SSE2 code in performance. If you remove the SetPixel call (for which the JITter has a redirection overhead) and leave only the pure maths, the JITter comes within 25% of Delphi 64. Most of that gap comes from an extended SSE2 instruction in 64bit that converts an integer to a float; it isn’t available in 32bit, so the 32bit JIT incurs a conversion overhead.
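To make the conversion overhead concrete: DWScript integers are 64-bit, and the SSE2 `cvtsi2sd` instruction only accepts a 64-bit integer operand in 64-bit mode. A 32-bit code generator therefore has to build the result from two 32-bit halves (or go through the FPU). The C sketch below shows the arithmetic of the split approach; `int64_to_double_split` is a hypothetical name, and this is one plausible sequence, not necessarily what the DWScript JITter emits. It assumes doubles are not evaluated in extended precision (as on x86-64 SSE2).

```c
#include <stdint.h>

/* Convert a signed 64-bit integer to double using only 32-bit pieces,
   as a 32-bit code generator without a 64-bit cvtsi2sd would have to. */
static double int64_to_double_split(int64_t x)
{
    /* Low 32 bits as an unsigned value (conversion is modulo 2^32). */
    uint32_t lo = (uint32_t)x;
    /* x - lo is a multiple of 2^32, so the division is exact and the
       quotient is the signed high half, in [-2^31, 2^31 - 1]. */
    int32_t hi = (int32_t)((x - (int64_t)lo) / 4294967296LL);
    /* hi * 2^32 and lo are both exact doubles, so the single rounding
       in the addition yields the same value as (double)x. */
    return (double)hi * 4294967296.0 + (double)lo;
}
```

The extra multiply, add, and the separate handling of the unsigned low half are the kind of work a single 64-bit `cvtsi2sd` avoids.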

Next step in the “Proof of Concept” will be SciMark.