I recently dusted off an artificial neural network project, now published at https://bitbucket.org/egrange/daneuralnet/ [1]. This is a subject I’ve been dabbling in, on and off, since the days of 8-bit CPUs.

The goals of the project are twofold: first, to experiment with neural networks that are practical to run and train on current CPUs, and second, to experiment with JIT compilation of neural network maths in Delphi.

TensorFlow and Python are cool, but they feel a bit too much like Minecraft, another sandbox of ready-made blocks 😉

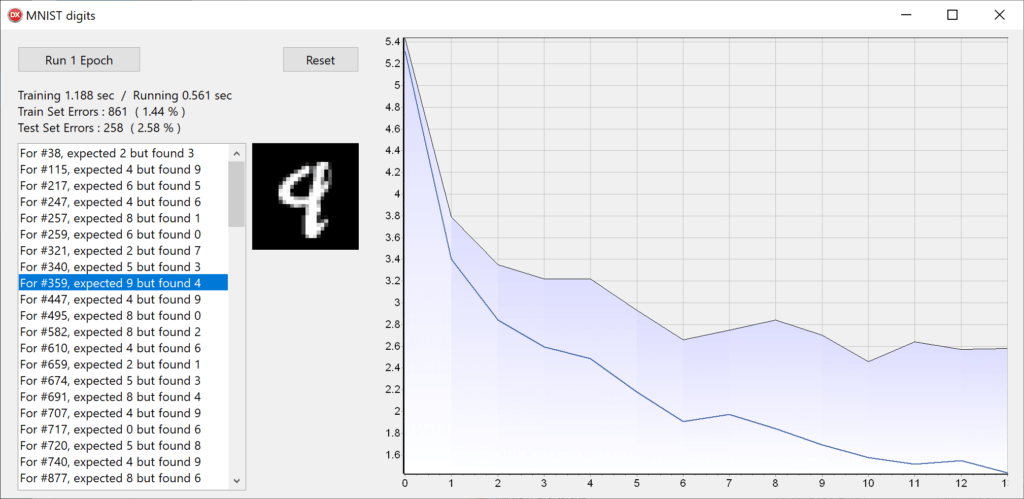

The repository includes just two classic “demos”: an XOR training one, and another with the MNIST digits [2], which is mostly useful for benchmarking maths routines.

There are dependencies on the DWScript code base (for the JIT portions), and the code is intended for Delphi 10.3 (it uses modern syntax). This will also serve to kick-start the 64-bit JITter for DWScript and a 64-bit version of SamplingProfiler, both of which are stuck in limbo.

The video below shows the output of the Neural Network while training to learn the XOR operator. Input values are the (x, y) coordinates of the image normalized to the [0..1] range.
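For readers who want to play with the idea, here is a minimal sketch of the XOR setup in Python. It is not the project's Delphi code: the `TinyNet` class, the 2-4-1 layout, the tanh/sigmoid activations, the learning rate and the `render` helper are all assumptions picked for illustration. It trains on the four XOR corners, then samples the network output over an image whose pixel coordinates are normalized to the [0..1] range.

```python
import math
import random

def sigmoid(z):
    # Numerically safe logistic function, written via tanh.
    return 0.5 * (1.0 + math.tanh(0.5 * z))

class TinyNet:
    """Hypothetical 2-H-1 network: tanh hidden layer, sigmoid output."""

    def __init__(self, hidden=4, seed=1):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x, y):
        h = [math.tanh(w[0] * x + w[1] * y + b) for w, b in zip(self.w1, self.b1)]
        out = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, out

    def train_sample(self, x, y, target, lr):
        """One gradient step on the loss 0.5 * (out - target)^2."""
        h, out = self.forward(x, y)
        d_out = (out - target) * out * (1.0 - out)
        for j, hj in enumerate(h):
            # Hidden delta is computed with w2[j] before that weight is updated.
            d_h = d_out * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * d_out * hj
            self.w1[j][0] -= lr * d_h * x
            self.w1[j][1] -= lr * d_h * y
            self.b1[j] -= lr * d_h
        self.b2 -= lr * d_out

# (x, y, expected output) for the four corners of the unit square
XOR = [(0.0, 0.0, 0.0), (0.0, 1.0, 1.0), (1.0, 0.0, 1.0), (1.0, 1.0, 0.0)]

def mean_squared_error(net):
    return sum((net.forward(x, y)[1] - t) ** 2 for x, y, t in XOR) / len(XOR)

def render(net, size=8):
    """Sample the output on a size x size image, coordinates normalized to [0, 1]."""
    return [[net.forward(col / (size - 1), row / (size - 1))[1]
             for col in range(size)]
            for row in range(size)]

net = TinyNet()
loss_before = mean_squared_error(net)
order = random.Random(2)
for epoch in range(5000):
    samples = XOR[:]
    order.shuffle(samples)  # visit the four samples in random order
    for x, y, t in samples:
        net.train_sample(x, y, t, lr=0.5)
loss_after = mean_squared_error(net)
image = render(net)
```

Calling `render` at regular intervals during training, rather than once at the end, would give the kind of frame-by-frame picture of the output that an animation needs.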

The MNIST dataset demo trains a small 784-60-10 network, charts the progress, and lets you check the digits for which recognition failed. Training time is measured over the whole 50k-sample data set, using Stochastic Gradient Descent on a single CPU core.
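To give a feel for the size of that network, here is a rough Python sketch of a 784-60-10 layout and a single Stochastic Gradient Descent update. Only the layer sizes come from the demo; the ReLU hidden layer, softmax output, cross-entropy loss, weight initialization, learning rate and all function names are assumptions for illustration, and a random sparse vector stands in for a real MNIST image.

```python
import math
import random

LAYERS = (784, 60, 10)  # input pixels, hidden units, digit classes

def init_layer(rng, n_in, n_out):
    # Small uniform weights scaled by fan-in (an assumed initialization).
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def parameter_count(layers):
    # Weights plus biases for each pair of consecutive layers.
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layers, layers[1:]))

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def forward(params, x):
    (w1, b1), (w2, b2) = params
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    p = softmax([sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(w2, b2)])
    return h, p

def sgd_step(params, x, label, lr):
    """One SGD update on one sample; returns the cross-entropy loss before the update."""
    (w1, b1), (w2, b2) = params
    h, p = forward(params, x)
    # Softmax + cross-entropy gradient at the output: p - one_hot(label)
    d_out = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
    # Backpropagate through the ReLU, using w2 before it is updated.
    d_hid = [sum(d_out[k] * w2[k][j] for k in range(len(w2))) if h[j] > 0.0 else 0.0
             for j in range(len(h))]
    for k, dk in enumerate(d_out):
        for j, hj in enumerate(h):
            w2[k][j] -= lr * dk * hj
        b2[k] -= lr * dk
    for j, dj in enumerate(d_hid):
        if dj != 0.0:
            row = w1[j]
            for i, xi in enumerate(x):
                row[i] -= lr * dj * xi
            b1[j] -= lr * dj
    return -math.log(p[label])

rng = random.Random(0)
params = [init_layer(rng, n_in, n_out) for n_in, n_out in zip(LAYERS, LAYERS[1:])]
# Stand-in for a normalized image: mostly zeros, a few "ink" pixels in [0, 1).
x = [rng.random() if rng.random() < 0.2 else 0.0 for _ in range(LAYERS[0])]
loss_first = sgd_step(params, x, label=3, lr=0.01)
loss_second = sgd_step(params, x, label=3, lr=0.01)
total_parameters = parameter_count(LAYERS)  # 784*60 + 60 + 60*10 + 10 = 47710
```

A full pass would repeat `sgd_step` for every sample in shuffled order, so nearly all of the time goes into the two matrix-vector products per sample, which is why this kind of demo is handy for benchmarking maths routines.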