Work and processing classes are typically short-lived: they are created to perform some form of processing, then freed. They can be simple collections, handle I/O of one kind or another, perform computations, pattern matching, etc.

When they’re used for simple workloads, their short-lived nature can sometimes become a performance problem.

The typical code for those looks like this:

```pascal
worker := TWorkClass.Create;
try
   // ...do some work...
finally
   worker.Free;
end;
```

This code can end up bottlenecking on allocation/de-allocation when:

- you do that a whole lot of times, and from various contexts

- you do that in a multi-threaded application and hit the single-threaded memory allocation limits

Spotting the Issue in the Profiler

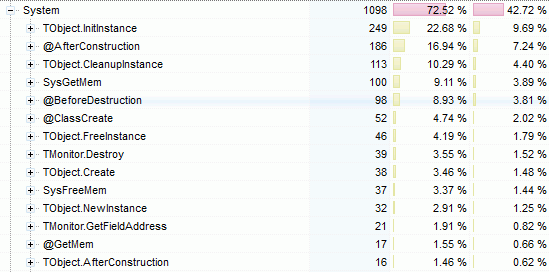

If you use Sampling Profiler [1], you’ll know this because your profilling results wil look like this

with TObject.InitInstance, @AfterConstruction, CleanupInstance, etc. up there, you just know you’re hitting the RTL object allocation/de-allocation overhead.

In a single-threaded application, the RTL will often be the bottleneck: the memory manager is involved, but not anywhere critical. For more complex classes with managed fields, even more RTL time will be involved, on top of your own Create/Destroy code.

In a multi-threaded application, you may see SysGetMem/SysFreeMem creep slowly toward the top spots.

Context Instance and Object Pools

A simple “fix” would be to create the work class once, then pass it around directly or in a “context” class (i.e. together with other work classes). However, that isn’t always practical: the “context” may not be well defined, it may expose implementation details, or you may simply not want to pass around a context parameter that would act as spaghetti-glue across libraries.

Another alternative is to use a full-blown object pool [2], but that usually involves a collection. If you want it to be thread-safe, it also means some form of locking or a more complex lock-free collection: all kinds of things which may not just be overkill, but could leave you with a complex pool that doesn’t perform much better than the original code for simple work classes.

Enter the mini object-pool, which is no panacea, but is really mini, thread-safe, and might just be enough to take care of your allocation/de-allocation problem.

The Mini Object Pool

The idea of the mini-pool is that it’s really mini: it holds by default at most… one entry!

But that allows it to be lightweight and rely on a single cheap atomic operation, the InterlockedExchangePointer function, for thread-safety.

Here is a minimalist implementation:

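```pascal
var
   vPool : Pointer; // holds at most one pooled TWorkClass instance

function AllocFromPool : TWorkClass;
begin
   // atomically take whatever is in the pool, leaving the slot empty
   Result := TWorkClass(InterlockedExchangePointer(vPool, nil));
   if Result = nil then
      Result := TWorkClass.Create; // pool was empty: allocate a new instance
end;

procedure ReturnToPool(var obj : TWorkClass);
begin
   if obj = nil then Exit;
   obj.Clear; // or whatever restores TWorkClass to its default state
   // cheap non-atomic pre-check: only attempt the swap if the slot looks empty
   if vPool = nil then
      obj := TWorkClass(InterlockedExchangePointer(vPool, Pointer(obj)));
   // obj is now nil (it was pooled), an instance displaced by a concurrent
   // return, or the original instance if the slot was taken: free it
   obj.Free;
   obj := nil; // don't leave the caller with a reference to a pooled/freed instance
end;
```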

This is quite simple, but even when applied to a very simple class like TList, the allocation/release cost can be cut by a factor of up to 15 if you use the InterlockedExchangePointer from the Windows unit, and up to 40 if you use the one from the dwsXPlatform [5] unit.

Ideally, rather than isolated functions, you’ll probably want to use a static class function for AllocFromPool, and a regular method for ReturnToPool (see, for instance, the TWriteOnlyBlockStream class in DWScript [6]’s dwsUtils [7] unit).

Just don’t forget to add something like this to your unit’s finalization clause, or you’ll be leaking one instance at shutdown:

```pascal
finalization
   TObject(vPool).Free;
```
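The class-based form suggested above could look like the following sketch. It is not DWScript’s actual TWriteOnlyBlockStream code: TWorkList (a TList descendant), AtomicSwap, FreeWorkListPool and DoSomeWork are hypothetical names. The Delphi branch assumes Windows and its InterlockedExchangePointer, like the code above, while the Free Pascal branch assumes the Pointer overload of System.InterlockedExchange as a stand-in.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
uses
  {$IFNDEF FPC}Windows,{$ENDIF} Classes;

type
  // hypothetical work class: a TList that knows how to recycle itself
  TWorkList = class(TList)
  public
    class function AllocFromPool : TWorkList; static;
    procedure ReturnToPool;
  end;

var
  vWorkListPool : Pointer; // at most one pooled TWorkList

// hypothetical helper: atomic pointer swap for Delphi (Windows) or Free Pascal
function AtomicSwap(var target : Pointer; value : Pointer) : Pointer;
begin
  {$IFDEF FPC}
  Result := InterlockedExchange(target, value);
  {$ELSE}
  Result := InterlockedExchangePointer(target, value);
  {$ENDIF}
end;

class function TWorkList.AllocFromPool : TWorkList;
begin
  Result := TWorkList(AtomicSwap(vWorkListPool, nil));
  if Result = nil then
    Result := TWorkList.Create;
end;

procedure TWorkList.ReturnToPool;
var
  displaced : TWorkList;
begin
  if Self = nil then Exit;
  Clear; // restore the default (empty) state before pooling
  displaced := Self;
  if vWorkListPool = nil then
    displaced := TWorkList(AtomicSwap(vWorkListPool, Pointer(Self)));
  displaced.Free; // nil if pooled, otherwise an instance that did not fit
end;

// call from the unit's finalization section to avoid leaking at shutdown
procedure FreeWorkListPool;
begin
  TObject(AtomicSwap(vWorkListPool, nil)).Free;
end;

// usage: same shape as the Create / try / finally / Free pattern
procedure DoSomeWork;
var
  list : TWorkList;
begin
  list := TWorkList.AllocFromPool;
  try
    list.Add(nil); // ...do some work...
  finally
    list.ReturnToPool; // list must not be used after this point
  end;
end;
```

After ReturnToPool, the caller’s reference should be considered dead: the instance is either sitting in the pool or already freed.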

Scaling it Up

Sometimes the one-instance pool won’t be enough, in a multi-threaded situation or when work classes can be allocated recursively (i.e. when you need several at the same time). The mini-pool may then progressively degrade back to the original allocation/de-allocation bottleneck (always measure nonetheless, you may be surprised by its resilience).

The InterlockedExchangePointer strategy is, however, about twice as efficient as a critical-section approach, and less prone to contention and serialization effects, so here are a few directions to make the mini-pool scale without introducing much complexity:

- use a threadvar for the vPool variable, so that you get one mini-pool per thread. You’ll have to clean up the pool manually when your thread terminates, though.

- use several variables and a statistical index, based on GetThreadID or an InterlockedAdd. This can work quite well in both threaded and non-threaded applications, and the cleanup can still happen in the unit finalization.
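A minimal sketch of the threadvar direction, assuming a plain TList as the work class; the routine names are hypothetical. Since each thread only ever touches its own slot, this sketch drops the atomic exchange and uses plain reads and writes, a simplification that only holds for the per-thread variant.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
uses
  Classes;

threadvar
  vThreadPool : Pointer; // one mini-pool slot per thread

// only the owning thread reads or writes its slot,
// so no InterlockedExchangePointer is needed here
function AllocFromThreadPool : TList;
begin
  Result := TList(vThreadPool);
  if Result <> nil then
    vThreadPool := nil
  else
    Result := TList.Create;
end;

procedure ReturnToThreadPool(var obj : TList);
begin
  if obj = nil then Exit;
  obj.Clear; // restore the default state before pooling
  if vThreadPool = nil then
    vThreadPool := Pointer(obj)
  else
    obj.Free;
  obj := nil;
end;

// nothing frees the pooled instance when a thread ends: call this at the
// end of each worker thread (e.g. at the end of TThread.Execute)
procedure CleanupThreadPool;
begin
  TObject(vThreadPool).Free;
  vThreadPool := nil;
end;

procedure DoSomeWork;
var
  list : TList;
begin
  list := AllocFromThreadPool;
  try
    list.Add(nil); // ...do some work...
  finally
    ReturnToThreadPool(list);
  end;
end;
```

The catch is the one mentioned above: a thread that forgets to call CleanupThreadPool leaks its pooled instance.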

For the second approach, if you want to minimize contention, declare your pool like this:

```pascal
type
   TCacheLinePointer = record
      Ptr : Pointer;
      Padding : array [1..CACHE_LINE_SIZE-SizeOf(Pointer)] of Byte;
   end;

var
   vPool : array [0..POOL_SIZE-1] of TCacheLinePointer;
```

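Here CACHE_LINE_SIZE is 64 or 128 and POOL_SIZE is a power of two. Since each record spans a full cache line, no two Ptr fields can share a line, so threads hitting different slots don’t contend through false sharing. Then, instead of accessing vPool directly, use vPool[index].Ptr, with index computed like:

```pascal
index := GetThreadID and (POOL_SIZE-1);
```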

While the improvement is statistical rather than guaranteed, the code stays very simple and lock-free, can scale like there is no tomorrow, and guaranteeing the pool’s cleanup is much simpler than with a threadvar.
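Putting the padded declaration and the thread-based index together, a striped mini-pool might look like the following sketch. The constant values, AtomicSwap, PoolIndex, FreePool and DoSomeWork are illustrative assumptions, and GetCurrentThreadId stands in for the GetThreadID mentioned above. PoolIndex also folds a few higher bits of the thread ID into the index, an addition of this sketch: the low bits of thread IDs may vary little in practice (Windows thread IDs are typically multiples of 4), which would leave some slots unused with a plain mask.

```pascal
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
uses
  {$IFNDEF FPC}Windows,{$ENDIF} Classes;

const
  CACHE_LINE_SIZE = 64;
  POOL_SIZE = 8; // must be a power of two for the masking below

type
  // one slot per cache line, so two slots never share a line
  TCacheLinePointer = record
    Ptr : Pointer;
    Padding : array [1..CACHE_LINE_SIZE-SizeOf(Pointer)] of Byte;
  end;

var
  vPool : array [0..POOL_SIZE-1] of TCacheLinePointer;

// hypothetical helper: atomic pointer swap for Delphi (Windows) or Free Pascal
function AtomicSwap(var target : Pointer; value : Pointer) : Pointer;
begin
  {$IFDEF FPC}
  Result := InterlockedExchange(target, value);
  {$ELSE}
  Result := InterlockedExchangePointer(target, value);
  {$ENDIF}
end;

// statistical slot choice: threads may still collide, just less often
function PoolIndex : Integer;
var
  h : NativeUInt;
begin
  h := NativeUInt(GetCurrentThreadId);
  h := h xor (h shr 2) xor (h shr 12); // fold in some higher bits
  Result := Integer(h and (POOL_SIZE-1));
end;

function AllocFromPool : TList;
begin
  Result := TList(AtomicSwap(vPool[PoolIndex].Ptr, nil));
  if Result = nil then
    Result := TList.Create;
end;

procedure ReturnToPool(var obj : TList);
var
  i : Integer;
begin
  if obj = nil then Exit;
  obj.Clear;
  i := PoolIndex;
  if vPool[i].Ptr = nil then
    obj := TList(AtomicSwap(vPool[i].Ptr, Pointer(obj)));
  obj.Free; // nil if pooled, otherwise an instance that did not fit
  obj := nil;
end;

// no per-thread bookkeeping: cleanup is a loop, e.g. in the unit finalization
procedure FreePool;
var
  i : Integer;
begin
  for i := 0 to POOL_SIZE-1 do
    TObject(AtomicSwap(vPool[i].Ptr, nil)).Free;
end;

procedure DoSomeWork;
var
  list : TList;
begin
  list := AllocFromPool;
  try
    list.Add(nil); // ...do some work...
  finally
    ReturnToPool(list);
  end;
end;
```

Any index is functionally correct, since each slot is still protected by the atomic swap; the hashing only affects how often threads end up competing for the same slot.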

Generic Troubles

Now, the temptation might be to make a generic version of that code, but alas, this is one case where the idea doesn’t seem to turn out so well, as you need to be able to clean up the instance before returning it to the pool (the call to Clear in the code above).

With generics, that means an interface constraint, which means reference counting, which will interfere with the pooling and release… An alternative would be to have all your work classes derive from a base class, but that can be limiting, as your generic would be not-so-generic, or it might involve other forms of overhead if you wrap the work class, which could negate the benefits of the mini-pool.

What would really work in the above case is a template 🙂