I recently bumped on a post by François on FieldByName [1] performance, and was bit surprised by the magnitude of speedups reported by Marco Cantu [2] in a comment:

” I keep fixing similar code I see my clients use, and in some case the performance can increase 5 to 10 times, for large loops. Good you are raising this problem. ”

We have similar-looking code being used here in our datasets (which aren’t TDataset-related at all however), and yet, repeatedly looking up fields by name isn’t a performance issue (it hardly registers in the profiling results, even in worst case situations, like in-memory SQLite [3] DBs).

By curiosity, I had a look at DB.pas… Suffice it to say that the VCL code make a good case study of why a Profiler is your friend, and what “out of thin air” optimization can lead to.

The FindField case of Unicode comparisons

The DB.pas code being copyrighted, I won’t post any excerpts here, but you can find it easily enough yourself, so I’ll just describe what happens.

FindField’s purpose is to find a TField by its name, in a case-insensitive fashion.

A naive implementation would thus look like that:

for i := 0 to FFields.Count-1 do begin

Result := FFields[i];

if AnsiCompareText(Result.Name, FieldName) = 0 then

Exit;

end;

Result := nil;

I then made a quick benchmark, consisting of a three cases:

- “best” case consists in finding the first field

- “worst” case consists in finding the 20th field

- “all” case consists in finding 20 fields out of the 20 fields

Field names were like “MyFieldName1”, “MyFieldName2”, etc. up to “MyFieldName20”. You’ll note that the differencing characters are at the end of the string, so the situation is quite unfavorable in terms of string comparisons, but neutral if you were to hash those strings f.i.

Also keep in mind I’ve just got a recent CPU (at the date of writing), and the timings afterward are for 100k lookups. On a regular end-user machine, you could probably double or quadruple the figures.

With the naive implementation, the “best” case performance is 19 ms, “worst” case 400 ms, and “all” is 210 ms.

This is quite lengthy, as with Unicode, case insensitive comparison (AnsiCompareText) is quite complex and expensive time-wise. There can be an obvious performance issue with FindField if used in a loop.

Case study of an optimization gone wrong

To cut down on that complexity, the VCL implementors chose to go for a hash. A risky choice to begin with: a hash has to be good enough to limit collisions, it has to be computed fast enough to actually bring a benefit, and last but not least, it results in sometimes complex extra code (and here you need a case insensitive hash, a plain old binary hash won’t do).

So the VCL code goes on to compute a hash for each of the fields, and alters the naive implementation above by checking the hash before performing the AnsiCompareText, doing the comparison only the hash matches. So far so good, eh? Well, here the trouble begins.

First, the VCL code is still facing one AnsiCompareText per FindField hit, plus an AnsiUpperCase (which is almost as expensive) to compute the hash.

Second, the TNamedItem.HashName implementation is a collection of “don’t”, look for yourself in the code:

- it includes a custom GetStringLength inline, to cut down on the access to the string character count probably, access which happens twice in the implementation (and which a profiling would have revealed to be negligible, especially in light of the following)

- since the introduction of FrankenStrings, a String can hold Ansi as well as UTF16, and the hash is computed on an UpperCase’d UTF-16, so you’ve got extra conversion code in there, in case it is Ansi, including dynamic allocation to serve as buffer for UnicodeFromLocaleChars, which is invoked no less than twice

- in case the String is already UTF-16, a buffer is still dynamically allocated, and the AnsiUpperCase’d name copied to it, I guess that’s to preserve the “efficiency” of the loop that computes the actual hash…

- then comes the hash computation loop, that was obviously where the optimization effort went, it works on a PChar, does a ROL and XOR, and it is certainly efficient, except that a mere profiling would have shown its efficiency didn’t matter, unless your field names are several hundred characters long…

The VCL implementation has a “best” case performance of 42 ms (2 times slower than naive), a “worst” case of 50 ms (8 times faster than naive), and “all” of 46ms (5 times faster than naive).

Thing is, you likely won’t often have 20 fields in your queries, and the VCL implementation needs at least 3 fields to pull ahead of the naive implementation. Given the size and complexity of the VCL code involved, I would say that’s quite an under-achievement.

Last but not least, if you profile the VCL code, you’ll see that HashName, a whole bunch of memory allocation and string management code from System.pas are quite stressed, given the above, that’s not too surprising, but that means performance in a multi-threaded situation will only get worse.

Doing it the efficient way

Let’s do it with the help of a profiler [4], and a bit of laziness.

Initially AnsiCompareText is the obvious, overwhelming culprit the profiler will point to in the naive implementation, there are two roads from that point:

- optimizing AnsiCompareText, this is complex, involves quite a bit of code, and we’re lazy, remember?

- the fastest AnsiCompareText is the one you don’t do, that’s the lazy road.

How to not do the AnsiCompareText?

One reason there are so many of them in the first place is that there is a loop on the fields. And when optimizing, loops are good, they mean you’ve got big O [5] optimization potential, and big O optimization is how you achieve orders of magnitude speedups.

In this case, it’s a simple O(n) string search loop, for which one of the classic optimizations is a reduction to O(ln n) using binary search. That however requires an ordered list, and the Fields list isn’t sorted, and can’t be sorted.

So we need a good old fashioned index.

One such readily available index is good old TStringList, with Sorted set to True: place the field names in the Strings[], the TField object in the Objects[]. Use IndexOf() to find a field. That’s all. You have reduced the AnsiCompareText from O(n) to O(ln n).

// after filling up or altering the Fields FIndex.Clear; // FIndex assumed created, set to sorted, case insensitive for i := 0 to FFields.Count-1 do FIndex.AddObject( FFields[i].Name, FFields[i] ); ... // Find a field with i := FIndex.IndexOf( fieldName ); if i >=0 then field := TField( FIndex.Objects[i] ) else field := nil;

With the above code, on a 20 fields situation, the best/worst/all cases benchmarks around 78 ms, which is coherent with the O(ln n) expectation (there are no best or worst case).

A quick look in the profile reveals AnsiCompareText is still the overwhelming bottleneck, instead of being the one in our code, it’s now the one in the TStringList.CompareStrings. The profiler tells us the AnsiCompareText is the key, no need to worry about optimizing Length() 😉

How to go further from there?

We are O(ln n), could we go to O(1)? That would involve a hash list, which means a hash, a case-insensitive hash. We don’t have one handy, they’re complex, this is not lazy. Also a hash list can be costly memory-wise, we don’t want a huge setup for what could be a two-fields-dataset affair in practice.

The fastest AnsiCompareText is the one you don’t do… so, do we really need AnsiCompareText? No.

Why? Because we only really need to index the names that are looked up, and FindField is troublesome when it’s invoked in a loop, looking up the very same name strings again and again, ad nauseum.

Rather than indexing the field names, we can index the field names that are actually looked up, but this time in a case sensitive TStringList, thus changing our index to a cache of sorts:

// after filling up or altering the Fields

FIndex.Clear; // FIndex assumed created, set to sorted, case sensitive

...

// Find a field with

k:=FIndex.IndexOf(FieldName);

if k>=0 then

Result:=TField(FIndex.Objects[k])

else begin

// not in index, find it with naive implementation

Result:=nil;

for i:=0 to FFields.Count-1 do begin

Result:=FFields[i];

if AnsiCompareText(Result.Name, FieldName) = 0 then

Break;

end;

// add to index

FIndex.AddObject(FieldName, Result);

end;

After benchmarking, however, there is no speedup… A look at the profiling results shows that TStringList.CompareStrings is still the bottleneck, this time because of AnsiCompareStr… is there no end to them slow Unicode functions???

Why is AnsiCompareStr slow? in part because in Unicode the same character can be encoded differently, and in part because the WinAPI implementation is just plain no good.

In our case however, the Unicode encodings details don’t matter, it’s a cache, the ordering is meaningless as long as it is consistent, so we can just subclass TStringList and override CompareStrings:

function TIndexStringList.CompareStrings(const S1, S2: string): Integer; begin Result:=CompareStr(S1, S2); end;

In Delphi XE, CompareStr() is fast, it is still based on Pierre le Riche’s FastCode version (but who knows what will happen to it in Delphi 64 where there is no BASM? but I digress…).

Wrapping it up

The new benchmark figures are now around 8 ms, in all cases. That’s five times faster than the VCL’s best case with a 20 fields dataset, and it scales nicely with field counts, as we’re still O(ln n). Just to illustrate:

- with 3 fields: VCL takes 42 to 45 ms, our code 4 to 5.6 ms

- with 100 fields: VCL takes 42 to 81 ms, our code 10 to 18 ms.

This is also achieved with a lot less code than the VCL, no asm or fancy tricks, and we achieved speedups of the same magnitude as what Marco Cantu reported, what François and all TDataset users have to labor for by optimizing on the spot every time they have a loop on a dataset.

Is there some more fat to be trimmed?

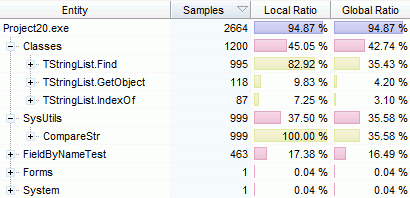

The final profiling of the benchmark look like that:

[6]16.5% are lost to the overhead (a simple loop that calls FindField repeatedly), we can assume CompareStr is optimal enough, the rest is spent in TStringList methods which are decently implemented.

[6]16.5% are lost to the overhead (a simple loop that calls FindField repeatedly), we can assume CompareStr is optimal enough, the rest is spent in TStringList methods which are decently implemented.

As a bonus, you’ll notice there is no noteworthy stress on dynamic memory allocation, meaning things will scale all the better when multi-threading.

As an alternative to TStringList, you could wonder about the new generic TDictionary<>, if you’re feeling adventurous.

However, you wouldn’t be rewarded for your risk, as it’s slower than the solution exposed here (up to 5 times slower when there are few fields, and slightly slower when there are hundreds of fields). A look at the profiler shows it wastes most of its time… computing the hash. Its memory overhead is also quite higher and would probably bite in a multi-thread situation (I’ll let the astute reader figure out why the TStringList approach gets away with very little memory allocation).

The optimization is done.

You could of course go further, there are a couple low hanging percents to grab, but to really improve, it would involve libraries or extra code that would go beyond the scope of an isolated optimization case such as this one, IMHO.